Hello everyone,

I’m trying to start a stream on Wowza programmatically by feeding the stream myself with packets coming from GStreamer.

Wowza provides an API called the “Publisher API” (https://www.wowza.com/resources/serverapi/4.5.0/com/wowza/wms/stream/publish/Publisher.html). It lets you publish a stream on Wowza from Java code by feeding the stream with byte[] packets.

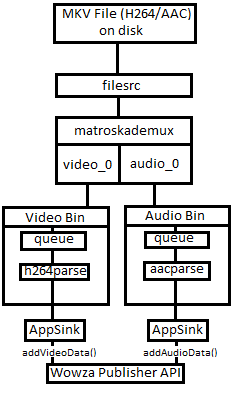

What I’m trying to do is read an MKV file from disk with GStreamer and send its packets to Wowza using this API.

My problem is that Wowza doesn’t seem to recognize what’s inside the packets I’m sending. See the logs:

Audio codec:PCM_BE isCompatible:false

Video codec:UNKNOWN[0] isCompatible:false

For information, my video and audio packets use the H.264 and AAC codecs, two formats that Wowza can read.

I think the packets coming from GStreamer may not be usable by Wowza directly without some kind of transformation or adaptation.

The Publisher API page describes the packet format for AAC and H.264. I don’t know whether GStreamer already delivers the video and audio packets in this format, or whether I have to process the GStreamer packets myself before giving them to Wowza.

For more detail, this is the GStreamer pipeline I’m using:

I’ve tried with and without h264parse and aacparse, and the result is the same.

This is my code that reads the GStreamer video/audio buffers and passes them to Wowza using the Publisher API. I think something needs to change here to make this work:

    private class VIDEO_BUFFER_LISTENER implements AppSink.NEW_BUFFER {
        @Override
        public void newBuffer(AppSink elem) {
            Buffer buffer = elem.pullBuffer();
            ByteBuffer bb = buffer.getByteBuffer();
            byte[] bytes = toArray(bb);
            AMFPacket amfPacket = new AMFPacket(IVHost.CONTENTTYPE_VIDEO, 1, bytes);
            publisher.addVideoData(amfPacket.getData(), amfPacket.getSize(), amfPacket.getTimecode());
            buffer.dispose();
        }
    }

    private class AUDIO_BUFFER_LISTENER implements AppSink.NEW_BUFFER {
        @Override
        public void newBuffer(AppSink elem) {
            Buffer buffer = elem.pullBuffer();
            ByteBuffer bb = buffer.getByteBuffer();
            byte[] bytes = toArray(bb);
            AMFPacket amfPacket = new AMFPacket(IVHost.CONTENTTYPE_AUDIO, 1, bytes);
            publisher.addAudioData(amfPacket.getData(), amfPacket.getSize(), amfPacket.getTimecode());
            buffer.dispose();
        }
    }

    private byte[] toArray(ByteBuffer bb) {
        byte[] b = new byte[bb.remaining()];
        bb.get(b);
        return b;
    }

This is the format described by Wowza on the page https://www.wowza.com/resources/serverapi/4.5.0/com/wowza/wms/stream/publish/Publisher.html:

Basic packet format:

Audio:

    AAC
        [1-byte header]
        [1-byte codec config indicator (1 - audio data, 0 - codec config packet)]
        [n-bytes audio content or codec config data]

    All others
        [1-byte header]
        [n-bytes audio content]

Below is the bit layout of the header byte of data (table goes from least significant bit to most significant bit):

    1 bit   Number of channels:
                0   mono
                1   stereo

    1 bit   Sample size:
                0   8 bits per sample
                1   16 bits per sample

    2 bits  Sample rate:
                0   special or 8KHz
                1   11KHz
                2   22KHz
                3   44KHz

    4 bits  Audio type:
                0   PCM (big endian)
                1   PCM (swf - ADPCM)
                2   MP3
                3   PCM (little endian)
                4   Nelly Moser ASAO 16KHz Mono
                5   Nelly Moser ASAO 8KHz Mono
                6   Nelly Moser ASAO
                7   G.711 ALaw
                8   G.711 MULaw
                9   Reserved
                a   AAC
                b   Speex
                f   MP3 8Khz
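To make the bit layout above concrete, here is a minimal Java sketch that packs and unpacks the audio header byte. The class and method names (`AudioHeaderByte`, `encode`, `audioType`, and so on) are hypothetical and are not part of the Wowza API; only the field positions and values come from the table. It suggests that a packet whose first byte has a zero upper nibble would be read as audio type 0, "PCM (big endian)", which may be related to the `PCM_BE` line in my logs.

```java
final class AudioHeaderByte {
    // Field values copied from the table above.
    static final int AUDIO_TYPE_AAC = 0xa;
    static final int RATE_44KHZ = 3;

    /** Packs the four fields, least significant bit first, as in the table. */
    static int encode(int audioType, int sampleRate, boolean sixteenBit, boolean stereo) {
        return ((audioType & 0x0f) << 4)      // bits 4-7: audio type
             | ((sampleRate & 0x03) << 2)     // bits 2-3: sample rate
             | ((sixteenBit ? 1 : 0) << 1)    // bit 1: sample size
             | (stereo ? 1 : 0);              // bit 0: number of channels
    }

    static int audioType(int header)        { return (header >> 4) & 0x0f; }
    static int sampleRate(int header)       { return (header >> 2) & 0x03; }
    static boolean isSixteenBit(int header) { return ((header >> 1) & 1) == 1; }
    static boolean isStereo(int header)     { return (header & 1) == 1; }

    public static void main(String[] args) {
        int aac = encode(AUDIO_TYPE_AAC, RATE_44KHZ, true, true);
        System.out.println(Integer.toHexString(aac)); // af
        System.out.println(audioType(0x00));          // 0, "PCM (big endian)" in the table
    }
}
```

With AAC, 44KHz, 16 bits per sample and stereo, the fields combine to 0xaf, which agrees with the documentation's statement that the AAC header byte is always 0xaf.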

Note: For AAC the codec config data is generally a two byte packet that describes the stream. It must be published first. Here is the basic code to fill in the codec config data.

    AACFrame frame = new AACFrame();
    int sampleRate = 22100;
    int channels = 2;
    frame.setSampleRate(sampleRate);
    frame.setRateIndex(AACUtils.sampleRateToIndex(sampleRate));
    frame.setChannels(channels);
    frame.setChannelIndex(AACUtils.channelCountToIndex(sampleRate));
    byte[] codecConfig = new byte[2];
    AACUtils.encodeAACCodecConfig(frame, codecConfig, 0);

Note: For AAC the header byte is always 0xaf

Note: For Speex the audio data must be encoded as 16000Hz wide band
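If I read the AAC layout above correctly, each buffer would need two extra bytes in front of it before being handed to `publisher.addAudioData(...)`. The following is only a sketch under that reading: `AacPacketFramer` and its methods are hypothetical names, and it assumes the payload coming out of GStreamer is a raw AAC frame (no ADTS header), which I have not verified.

```java
final class AacPacketFramer {
    static final byte AAC_HEADER = (byte) 0xaf; // "For AAC the header byte is always 0xaf"
    static final byte CODEC_CONFIG = 0;         // indicator: codec config packet
    static final byte AUDIO_DATA = 1;           // indicator: audio data

    /** Prepends [1-byte header][1-byte codec config indicator] to the payload. */
    static byte[] frame(byte indicator, byte[] payload) {
        byte[] packet = new byte[2 + payload.length];
        packet[0] = AAC_HEADER;
        packet[1] = indicator;
        System.arraycopy(payload, 0, packet, 2, payload.length);
        return packet;
    }

    /** Packet that must be published first, carrying the codec config bytes. */
    static byte[] codecConfigPacket(byte[] codecConfig) {
        return frame(CODEC_CONFIG, codecConfig);
    }

    /** Packet carrying one AAC frame (assumed raw, without ADTS header). */
    static byte[] audioDataPacket(byte[] rawAacFrame) {
        return frame(AUDIO_DATA, rawAacFrame);
    }
}
```

The `codecConfig` array produced by `AACUtils.encodeAACCodecConfig` in the quoted snippet would go through `codecConfigPacket` once, and every later buffer through `audioDataPacket`, with the resulting array and its length passed to `addAudioData` along with a timecode in milliseconds.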

Video:

    H.264
        [1-byte header]
        [1-byte codec config indicator (1 - video data, 0 - codec config packet)]
        [3-byte time difference between dts and pts in milliseconds]
        [n-bytes video content or codec config data]

    All others
        [1-byte header]
        [n-bytes audio content]

Below is the bit layout of the header byte of data (table goes from least significant bit to most significant bit):

    4 bits  Video type:
                2   Sorenson Spark (H.263)
                3   Screen
                4   On2 VP6
                5   On2 VP6A
                6   Screen2
                7   H.264

    2 bit   Frame type:
                1   K frame (key frame)
                2   P frame
                3   B frame

Note: H.264 codec config data is the same as the AVCc packet in a QuickTime container.

Note: All timecode data is in milliseconds
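Under the same reading, an H.264 buffer would need a five-byte prefix before going to `publisher.addVideoData(...)`. This is a hedged sketch, not a confirmed solution: `H264PacketFramer` is a hypothetical helper, the big-endian order of the 3-byte time difference is an assumption (the quoted text does not specify byte order), and so is marking the codec config packet as a key frame. The quoted text also does not say whether the video payload must use length-prefixed NAL units or start codes, so the sketch leaves the payload untouched.

```java
final class H264PacketFramer {
    static final int VIDEO_TYPE_H264 = 7; // low 4 bits of the header byte
    static final int FRAME_KEY = 1;       // frame type values from the table
    static final int FRAME_P = 2;
    static final int FRAME_B = 3;
    static final byte CODEC_CONFIG = 0;   // indicator: codec config packet
    static final byte VIDEO_DATA = 1;     // indicator: video data

    /** Builds [header][indicator][3-byte pts-dts in ms][payload]. */
    static byte[] frame(int frameType, byte indicator, int ptsMinusDtsMs, byte[] payload) {
        byte[] packet = new byte[5 + payload.length];
        packet[0] = (byte) (((frameType & 0x03) << 4) | VIDEO_TYPE_H264);
        packet[1] = indicator;
        // Assumption: most significant byte first.
        packet[2] = (byte) (ptsMinusDtsMs >> 16);
        packet[3] = (byte) (ptsMinusDtsMs >> 8);
        packet[4] = (byte) ptsMinusDtsMs;
        System.arraycopy(payload, 0, packet, 5, payload.length);
        return packet;
    }

    /** Codec config packet carrying the AVCc data (key frame type is an assumption). */
    static byte[] codecConfigPacket(byte[] avcc) {
        return frame(FRAME_KEY, CODEC_CONFIG, 0, avcc);
    }

    /** Video data packet for one access unit. */
    static byte[] videoDataPacket(int frameType, int ptsMinusDtsMs, byte[] payload) {
        return frame(frameType, VIDEO_DATA, ptsMinusDtsMs, payload);
    }
}
```

A key frame then gets the header byte 0x17 and a P frame 0x27. A buffer sent without this prefix would have its first payload byte interpreted as the header, so a first byte of 0x00 would decode to video type 0, which is not in the table.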

Do you know what the problem is in my code, and what I have to do to make this work?